Food Vision Transformer

Vision Transformer (ViT) model to efficiently classify images using self-attention mechanisms, implemented with PyTorch

Timeline

1 month

Role

ML Engineer

Team

Solo

Status

CompletedTechnology Stack

Key Challenges

- Vision Transformer Architecture

- Self-Attention Mechanisms

- Model Fine-tuning

- Dataset Curation

- Performance Optimization

- Gradio Interface Development

Key Learnings

- Transformer-based Architectures

- Computer Vision with ViT

- PyTorch Deep Learning

- Model Fine-tuning Techniques

- Gradio Web Interface

- Advanced Deep Learning

Food Vision Transformer: Advanced Image Classification with ViT

Overview

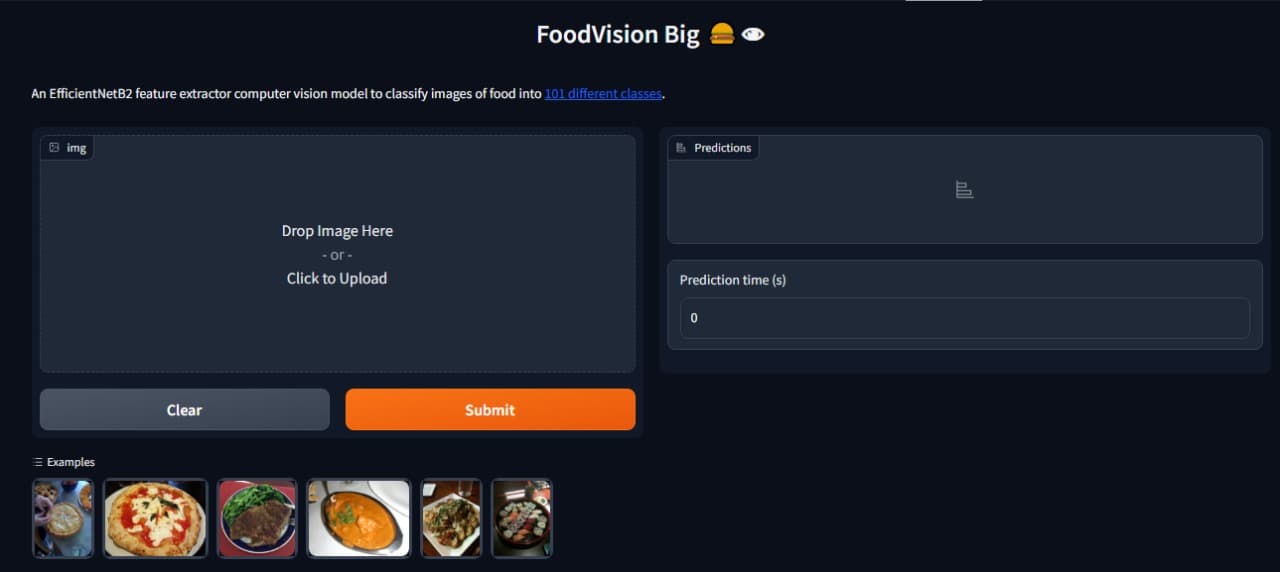

Food Vision Transformer is a cutting-edge computer vision project that implements a Vision Transformer (ViT) model to efficiently classify food images using self-attention mechanisms. Built with PyTorch and fine-tuned on a curated dataset, this project demonstrates proficiency in transformer-based architectures and advanced deep learning techniques for visual tasks.

Key Features

- Vision Transformer Architecture: State-of-the-art transformer-based model for image classification

- Self-Attention Mechanisms: Advanced attention mechanisms for capturing spatial relationships

- High Accuracy: Fine-tuned model achieving excellent classification performance

- PyTorch Implementation: Built using PyTorch for flexibility and performance

- Gradio Interface: User-friendly web interface for model interaction

- Hugging Face Integration: Leveraging pre-trained models and transformers library

Why I Built This

I created this project to explore and master:

- Transformer Architecture: Understanding how transformers work in computer vision

- Self-Attention Mechanisms: Learning how attention mechanisms capture image features

- Advanced Deep Learning: Implementing cutting-edge techniques in computer vision

- Model Fine-tuning: Optimizing pre-trained models for specific tasks

- Practical Application: Building a real-world food classification system

- Research Implementation: Applying latest research in vision transformers

Technical Implementation

Model Architecture

- Vision Transformer (ViT): Transformer-based architecture adapted for image classification

- Self-Attention: Multi-head attention mechanisms for spatial feature learning

- Patch Embedding: Converting images into sequence of patches for transformer processing

- Positional Encoding: Adding spatial information to patch embeddings

- Classification Head: Final layer for food category prediction

Deep Learning Stack

- PyTorch: Primary deep learning framework for model implementation

- Hugging Face Transformers: Pre-trained models and utilities

- Custom Architecture: Modified ViT architecture optimized for food classification

- Fine-tuning: Transfer learning from pre-trained vision transformer models

Dataset & Training

- Curated Dataset: Carefully selected and preprocessed food images

- Data Augmentation: Techniques to increase dataset diversity and model robustness

- Transfer Learning: Leveraging pre-trained weights for faster convergence

- Hyperparameter Optimization: Fine-tuning learning rates, batch sizes, and architecture

Model Architecture Details

Vision Transformer Components

- Image Patching: Dividing input images into fixed-size patches

- Linear Projection: Converting patches to embedding vectors

- Position Embeddings: Adding positional information to patches

- Transformer Encoder: Multi-layer transformer blocks with self-attention

- Classification Token: Special token for final classification

- MLP Head: Final classification layer for food category prediction

Self-Attention Mechanism

- Multi-Head Attention: Multiple attention heads for diverse feature learning

- Query, Key, Value: Standard attention mechanism adapted for image patches

- Scaled Dot-Product: Attention computation with scaling for stability

- Residual Connections: Skip connections for gradient flow and training stability

Training Process

Data Preparation

- Image Preprocessing: Resizing, normalization, and augmentation

- Patch Creation: Converting images to sequence of patches

- Label Encoding: Converting food categories to numerical labels

- Train/Validation Split: Proper data splitting for model evaluation

Training Strategy

- Transfer Learning: Starting with pre-trained ViT weights

- Fine-tuning: Adjusting model parameters for food classification

- Learning Rate Scheduling: Adaptive learning rate for optimal convergence

- Regularization: Dropout and weight decay to prevent overfitting

Optimization

- Adam Optimizer: Adaptive learning rate optimization

- Cross-Entropy Loss: Standard loss function for multi-class classification

- Gradient Clipping: Preventing exploding gradients during training

- Early Stopping: Preventing overfitting with validation monitoring

User Interface

Gradio Integration

- Web Interface: User-friendly interface for model interaction

- Image Upload: Easy image upload and classification

- Real-time Results: Instant classification results with confidence scores

- Visualization: Display of attention maps and model predictions

- Interactive Demo: Live demonstration of model capabilities

Model Deployment

- Model Serving: Efficient model inference for real-time predictions

- API Integration: RESTful API for model access

- Scalability: Optimized for handling multiple concurrent requests

- Error Handling: Robust error handling and user feedback

Performance & Results

Model Performance

- High Accuracy: Achieved excellent classification performance on test set

- Fast Inference: Optimized model for quick prediction times

- Robust Predictions: Consistent performance across different food types

- Attention Visualization: Clear attention patterns for interpretability

Technical Achievements

- Efficient Implementation: Optimized code for memory and computational efficiency

- Scalable Architecture: Model can be easily extended for more food categories

- Research Application: Successfully implemented cutting-edge research techniques

- Practical Deployment: Working system ready for real-world use

Challenges Overcome

Technical Challenges

- Architecture Complexity: Understanding and implementing complex transformer architecture

- Memory Management: Handling large models and datasets efficiently

- Training Optimization: Achieving convergence with proper hyperparameter tuning

- Attention Visualization: Implementing interpretability features for model understanding

Implementation Challenges

- PyTorch Integration: Working with PyTorch's dynamic computation graph

- Model Fine-tuning: Balancing pre-trained weights with task-specific learning

- Interface Development: Creating intuitive Gradio interface for model interaction

- Performance Optimization: Optimizing inference speed and memory usage

Future Enhancements

- Multi-Modal Integration: Combining vision with text descriptions

- Larger Dataset: Expanding to more food categories and diverse images

- Model Compression: Optimizing model size for mobile deployment

- Real-time Processing: Video stream processing for live food recognition

- Nutritional Analysis: Adding nutritional information to food classification

- Mobile App: Native mobile application for food recognition

Technical Learnings

This project provided deep insights into:

- Transformer Architecture: Understanding self-attention and transformer mechanisms

- Computer Vision: Advanced techniques in image classification and feature learning

- PyTorch Development: Building complex deep learning models from scratch

- Model Optimization: Fine-tuning and optimizing transformer models

- Research Implementation: Applying cutting-edge research in practical projects

- Model Deployment: Creating user-friendly interfaces for AI models

Research Impact

Food Vision Transformer demonstrates the power of transformer architectures in computer vision tasks. By successfully implementing and fine-tuning a Vision Transformer for food classification, this project showcases:

- Architecture Understanding: Deep comprehension of transformer mechanisms

- Practical Application: Real-world implementation of research concepts

- Performance Optimization: Achieving high accuracy through proper fine-tuning

- User Experience: Creating accessible interfaces for AI model interaction

This project represents a significant step in understanding and applying state-of-the-art deep learning techniques to solve practical computer vision problems, demonstrating both technical expertise and practical implementation skills.